Cloud Video Content Protection: 3 Proven Methods (2023)

Did you know that cloud video content consumption has jumped by 71% in the past year alone? With this rapid growth, protecting your video content is more important than ever. According to a recent study, 45 percent of businesses experienced a cloud data breach in 2022. Don't let your company become a statistic: protect your valuable digital assets now.

With the shift to online education in the post-pandemic era, demand for short videos, films, and TV series has also grown significantly.

The COVID-19 pandemic significantly increased demand for online education and OTT (over-the-top) platforms, transforming how we learn and consume content. The OTT market is also expected to gain millions of new subscribers and billions of dollars in revenue in the coming years.

During the coronavirus lockdowns, online video consumption set new records, and the trend continues to gain momentum.

To build high-quality video services that viewers are willing to pay for, it is not enough to offer an unrivalled user experience; video distribution must also be convenient and secure.

The Evolution of Content Protection

Mass video-on-demand (VoD) distribution began long before browsers could play secure video streams. Cable networks were the first to deliver content at scale and the first to face the problem of protecting it. The protection mechanisms developed for them have proven reasonably reliable for some 30 years.

At the time, the main problem was that people could freely connect to cable networks and access content that was meant to be restricted or available only by subscription. The solution was set-top boxes with unique smart cards that identified the subscriber and decrypted the encrypted signal.

The need to protect content on the Internet arose only later. At first, the web hosted only free video content, and large copyright holders were reluctant to put their content online, fearing the lack of reliable protection systems. With the rise of paid video streaming, platforms had to organize access control on their own.

Why do companies prefer CDN protection?

Today, almost every service that provides access to content uses a CDN (Content Delivery Network), a geographically distributed infrastructure that delivers content quickly to the users of web services and sites.

“Teyuto's streaming security solutions protect VOD content from piracy and other illicit use, which can be crucial for content creators and distributors.”

CDN servers are placed so as to minimize response time for users. A CDN is often a third-party service, and several providers are frequently used at once (Multi-CDN). In this setup, anyone with a link can access the content hosted on the provider's nodes.

This creates the need to enforce access rights to content in a distributed, loosely coupled system that is, moreover, open to everyone on the Internet.

Best Solution for protecting streaming video content

Unauthorized Access to Content

There are several ways to gain unauthorized access to restricted content. The most common fall into two categories.

At the authorization level

- Sharing account credentials with third parties, such as relatives, friends, colleagues, or members of public communities and forums.

- Selling or renting accounts, where users resell or temporarily grant access to their accounts.

- Leaks and theft of account credentials.

At the level of content delivery

- Unauthorized video downloads: via a direct link taken from the page source or the developer tools console, using browser plugins for downloading from video hosting sites, or with standalone software (for example, VLC or FFmpeg).

- Screen capture, using either software or hardware.

Protection at the authorization level is fairly straightforward (for example, blocking two or more simultaneous sessions from one user, using single sign-on (SSO), or monitoring suspicious activity), but protection at the content delivery level is harder. Let's take a closer look at content protection itself.
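The first authorization-level measure, blocking simultaneous sessions, can be sketched as a small limiter that the player calls periodically with a heartbeat. This is a minimal in-memory sketch, assuming a hypothetical `SessionLimiter` class, a per-device `session_id`, and a 60-second idle timeout; a production service would keep this state in shared storage.

```python
import time
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class SessionLimiter:
    """Hypothetical limiter: at most `max_sessions` active playback sessions per user."""
    max_sessions: int = 1
    idle_timeout: float = 60.0  # seconds without a heartbeat before a session is dropped
    _sessions: Dict[str, Dict[str, float]] = field(default_factory=dict, repr=False)

    def _prune(self, user_id: str, now: float) -> Dict[str, float]:
        # Forget sessions whose last heartbeat is older than idle_timeout.
        active = {
            sid: seen
            for sid, seen in self._sessions.get(user_id, {}).items()
            if now - seen < self.idle_timeout
        }
        self._sessions[user_id] = active
        return active

    def heartbeat(self, user_id: str, session_id: str, now: Optional[float] = None) -> bool:
        """Register or refresh a session; return False if playback must be blocked."""
        now = time.monotonic() if now is None else now
        active = self._prune(user_id, now)
        if session_id not in active and len(active) >= self.max_sessions:
            return False  # another device is already streaming on this account
        active[session_id] = now
        return True
```

With `max_sessions=1`, a second device is refused while the first keeps sending heartbeats, and is admitted once the first has been idle longer than the timeout.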

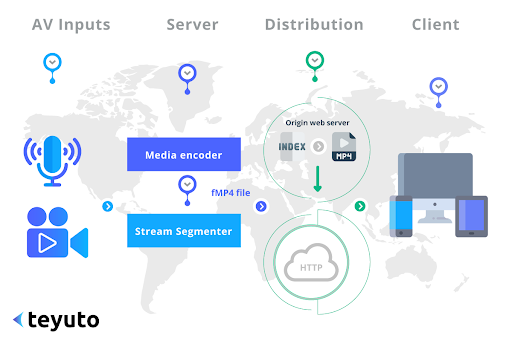

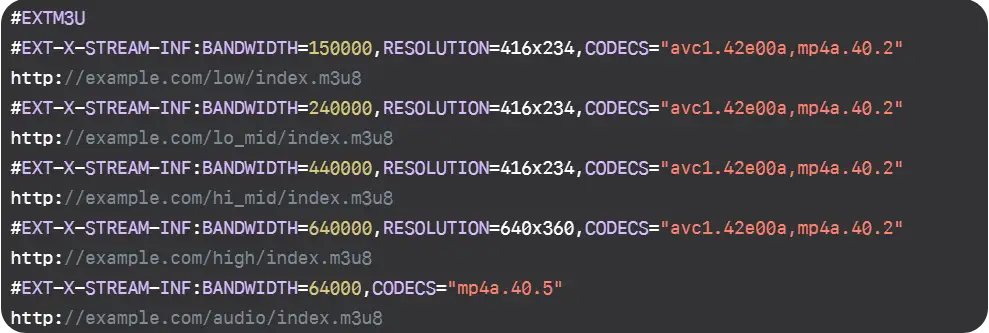

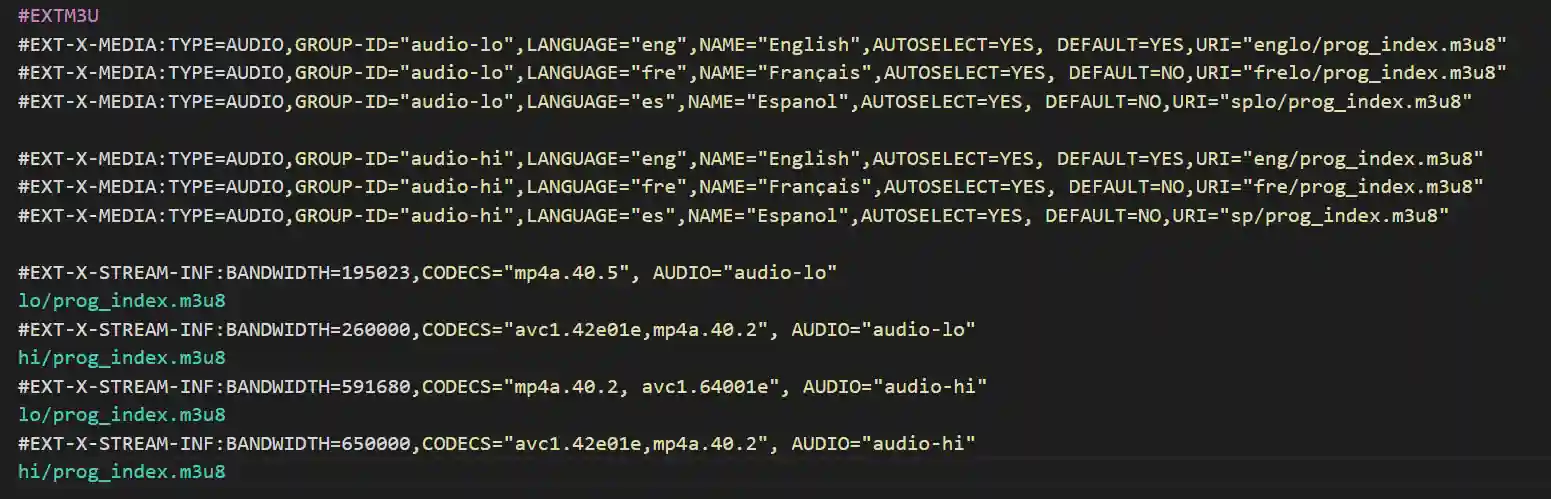

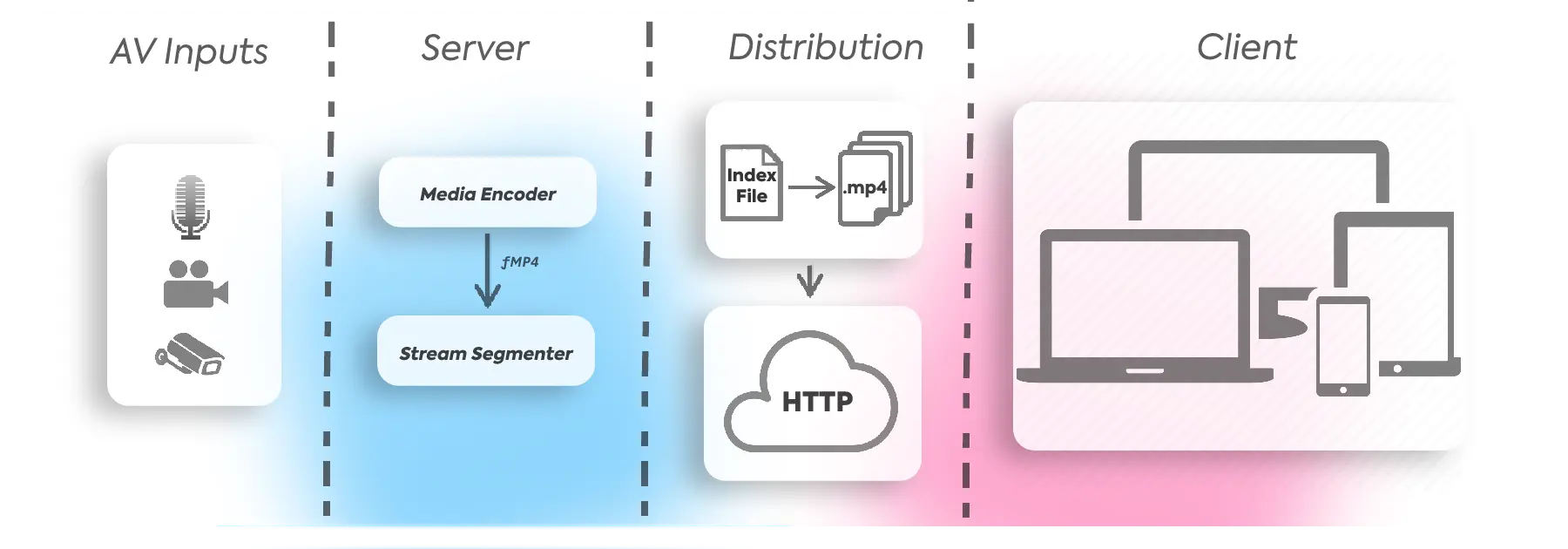

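One well-known solution for protecting streaming video content is encryption in the HLS (HTTP Live Streaming) protocol. Apple proposed this scheme, commonly called HLS AES, for transferring media files securely over HTTP: media segments are encrypted with AES-128, and the player fetches the key from a URI listed in the playlist.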
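In HLS, the encryption is announced in the media playlist with an `EXT-X-KEY` tag. The sketch below, assuming placeholder key and segment URLs, formats that tag and computes the IV a player uses when the tag has no `IV` attribute (per RFC 8216, the segment's Media Sequence Number as a 16-byte big-endian value). The helper names are hypothetical.

```python
from typing import Optional


def default_iv(media_sequence: int) -> bytes:
    """IV used when EXT-X-KEY has no IV attribute (RFC 8216):
    the segment's Media Sequence Number as a 16-byte big-endian value."""
    return media_sequence.to_bytes(16, "big")


def ext_x_key(key_uri: str, iv: Optional[bytes] = None) -> str:
    """Format an EXT-X-KEY tag declaring AES-128 encryption for the segments that follow."""
    tag = f'#EXT-X-KEY:METHOD=AES-128,URI="{key_uri}"'
    if iv is not None:
        # An explicit IV is written as a 0x-prefixed hexadecimal string.
        tag += ",IV=0x" + iv.hex()
    return tag


# A tiny media playlist with two encrypted segments (URLs are placeholders).
playlist = "\n".join([
    "#EXTM3U",
    "#EXT-X-VERSION:3",
    "#EXT-X-TARGETDURATION:6",
    "#EXT-X-MEDIA-SEQUENCE:0",
    ext_x_key("https://keys.example.com/video42/key0"),
    "#EXTINF:6.0,",
    "segment0.ts",
    "#EXTINF:6.0,",
    "segment1.ts",
    "#EXT-X-ENDLIST",
])
print(playlist)
```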

Encrypted video stream transmission

Encrypted transmission of video streams protects video content from unauthorized capture and tampering. It usually involves the following steps:

- Select an encryption algorithm: Choose an algorithm that keeps the stream from being stolen and decrypted in transit. AES is the usual choice for the video data itself; asymmetric algorithms such as RSA are typically used to protect keys rather than to encrypt the stream.

- Signed URLs: Signed URLs are a widespread security measure for protecting online video. When a user requests a video, the server generates a unique URL with a time-limited signature, allowing the user to view the video for a limited time. After the time limit expires, the URL becomes invalid, and the user can no longer view the video.

- Generate a key: Generate an encryption key for the selected algorithm. The server usually does this and distributes the key to the sender and the receiver of the video stream.

- Encrypt the video stream: The sender encrypts the video with the chosen algorithm and key to protect it from unauthorized capture.

- Transmit the encrypted stream: The encrypted stream is sent over a secure channel; HTTPS, which runs over TLS (the successor to SSL), can be used to protect the transmission.

- Decrypt the video stream: The receiver uses the same algorithm and key to decrypt the stream and restore the original video.
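The signed-URL step can be illustrated with an HMAC over the video path and an expiry timestamp. This is a minimal sketch assuming a hypothetical server-side `SECRET` and `expires`/`sig` query parameters; real CDNs each define their own token format (some use public-key signatures instead of HMAC), so check your provider's documentation.

```python
import hashlib
import hmac
import time
from typing import Optional
from urllib.parse import parse_qs, urlencode, urlsplit

SECRET = b"replace-with-a-server-side-secret"  # hypothetical shared secret


def sign_url(base_url: str, ttl_seconds: int, now: Optional[int] = None) -> str:
    """Append an expiry timestamp and an HMAC-SHA256 signature (assumes no existing query)."""
    now = int(time.time()) if now is None else now
    expires = now + ttl_seconds
    payload = f"{urlsplit(base_url).path}:{expires}".encode()
    signature = hmac.new(SECRET, payload, hashlib.sha256).hexdigest()
    return f"{base_url}?{urlencode({'expires': expires, 'sig': signature})}"


def verify_url(url: str, now: Optional[int] = None) -> bool:
    """Reject links that are unsigned, tampered with, or expired."""
    now = int(time.time()) if now is None else now
    parts = urlsplit(url)
    params = parse_qs(parts.query)
    try:
        expires = int(params["expires"][0])
        signature = params["sig"][0]
    except (KeyError, ValueError):
        return False
    payload = f"{parts.path}:{expires}".encode()
    expected = hmac.new(SECRET, payload, hashlib.sha256).hexdigest()
    # compare_digest avoids leaking how many characters matched through timing.
    return hmac.compare_digest(signature, expected) and now < expires
```

Because the path is part of the signed payload, a link signed for one video cannot be edited to point at another, and the embedded expiry makes shared links stop working after the TTL.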

Although encrypting video streams protects the content, it also adds overhead that affects transmission efficiency and latency, so a trade-off between security and efficiency has to be made.

In practice, factors such as transmission quality, network bandwidth, and device performance also need to be considered, and the encryption scheme should be chosen with all of them in mind.

Three Major DRM Technologies in the Multimedia Industry

Three DRM (Digital Rights Management) technologies have firmly established themselves in copy protection for multimedia content:

- Microsoft's PlayReady

- Google's Widevine

- Apple's FairPlay

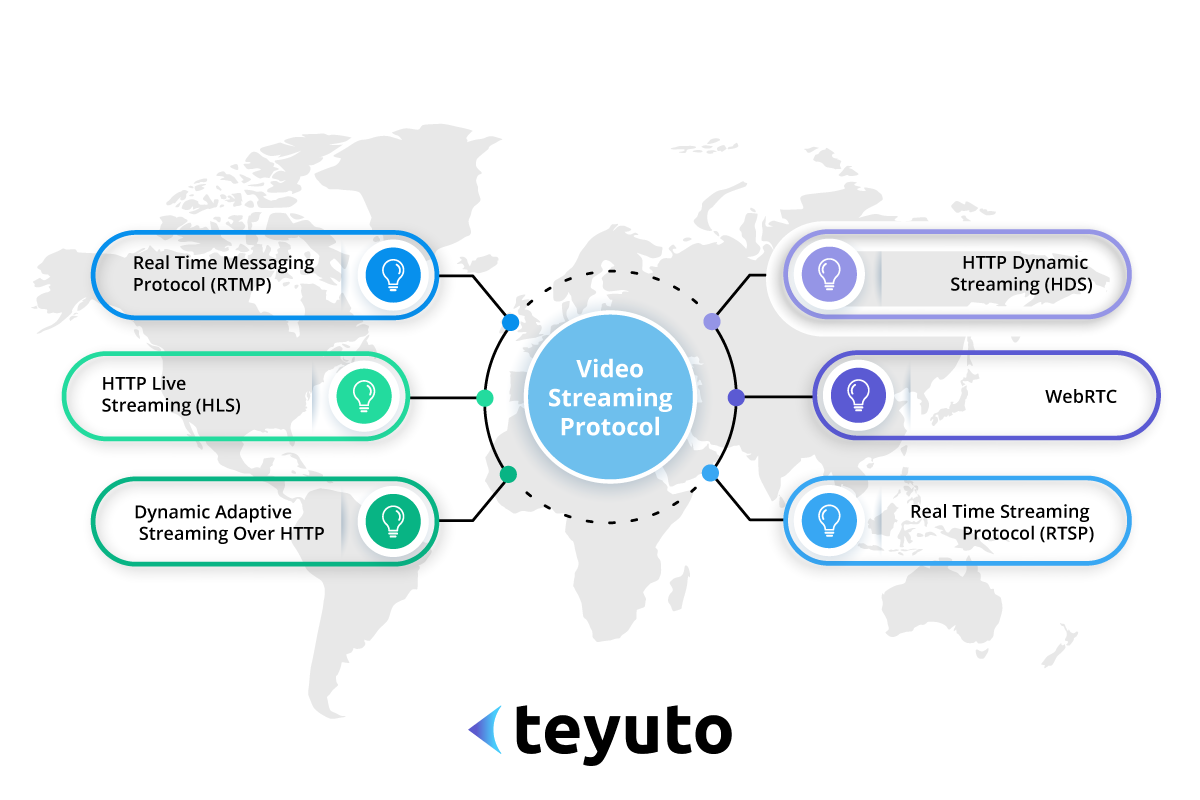

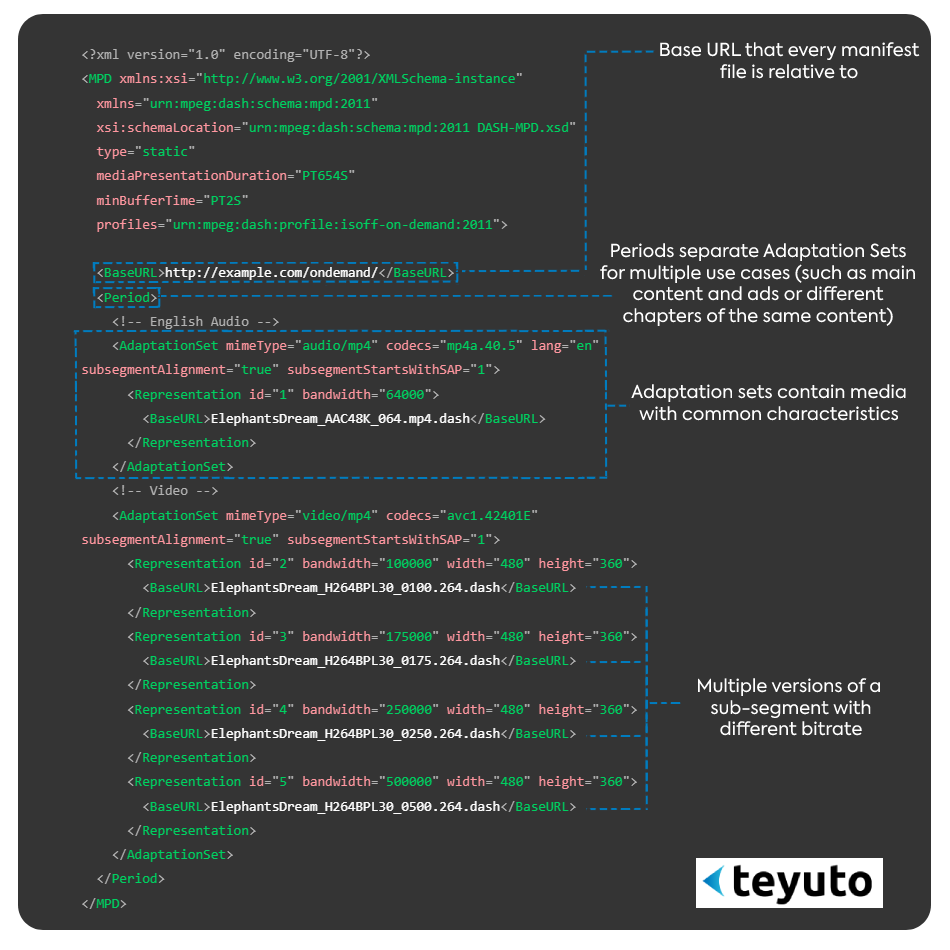

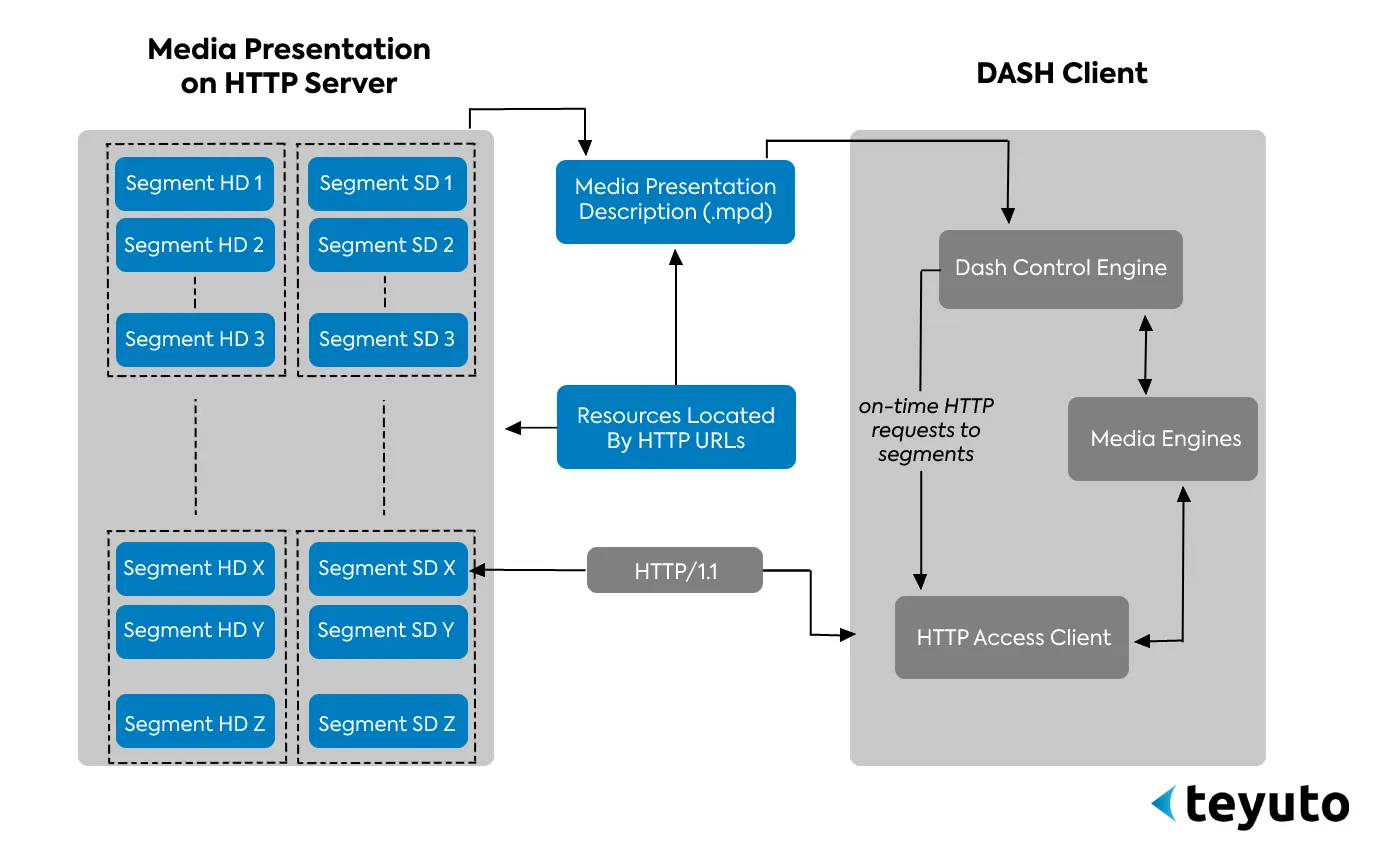

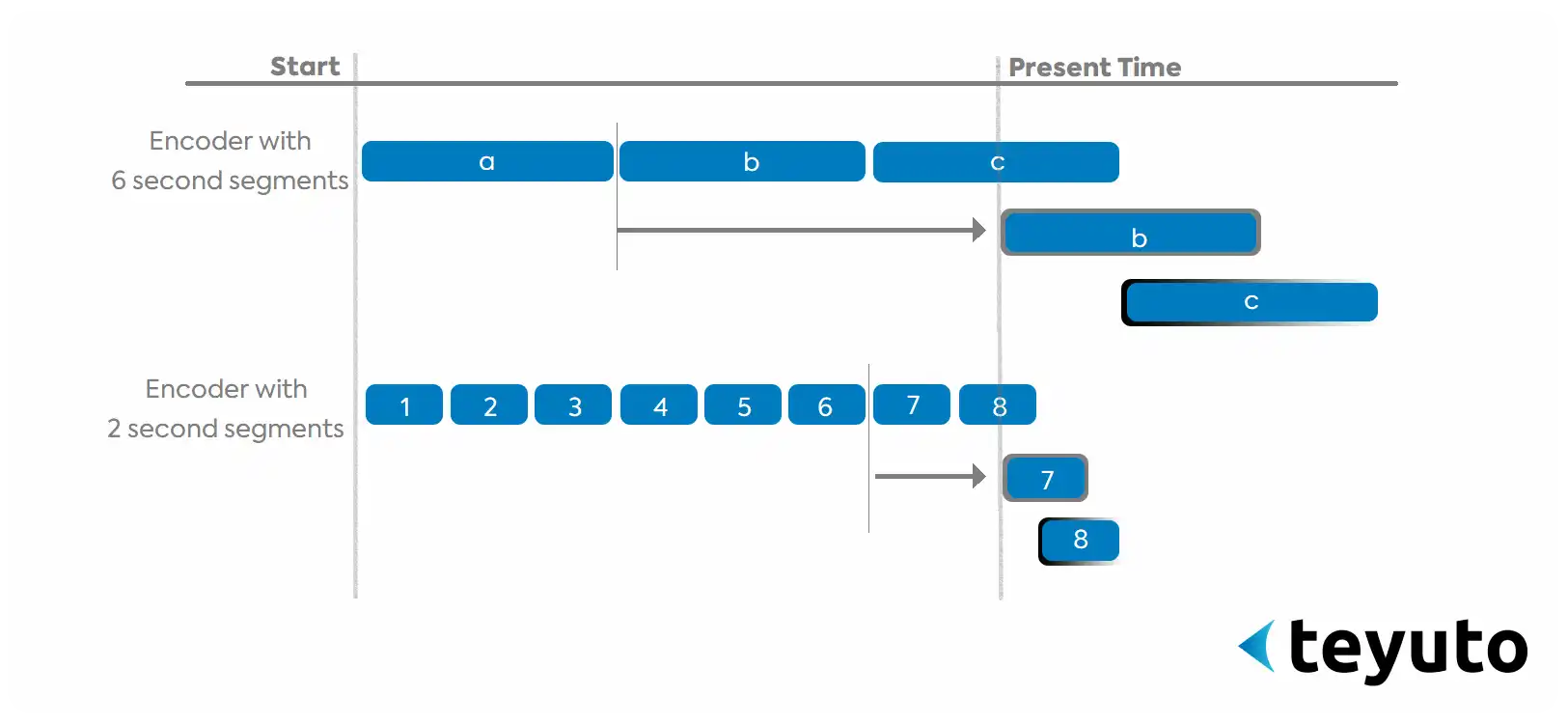

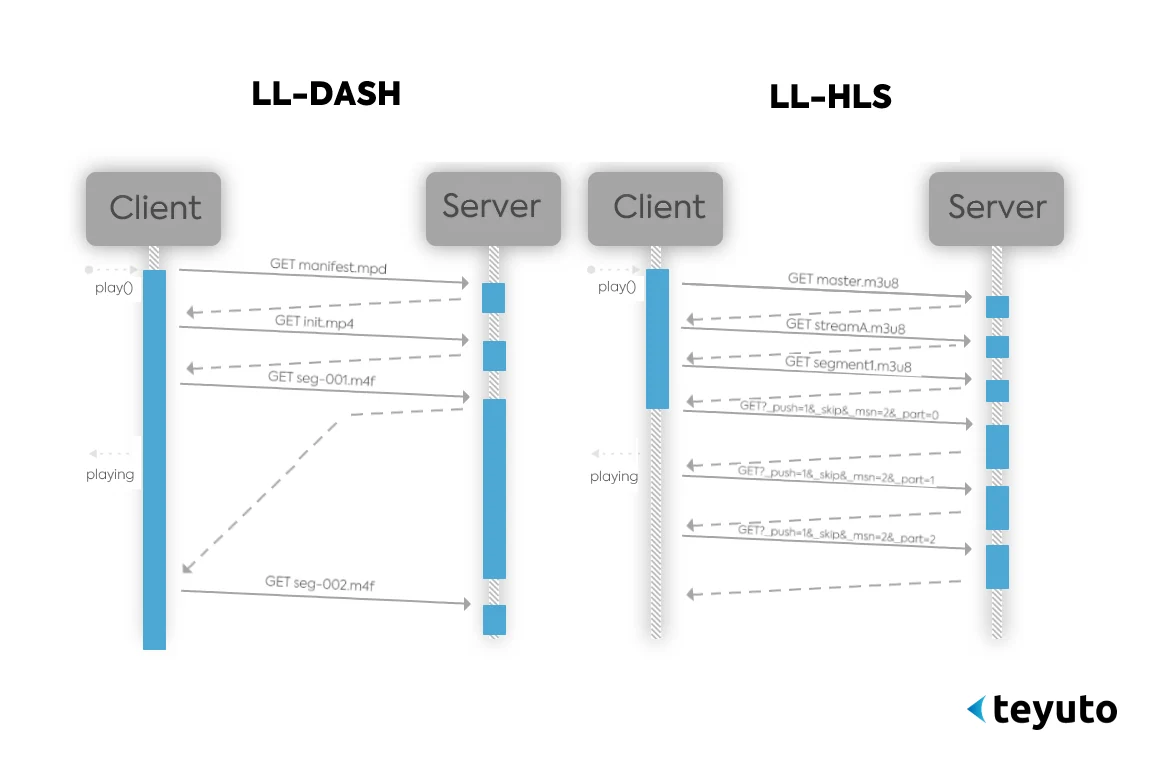

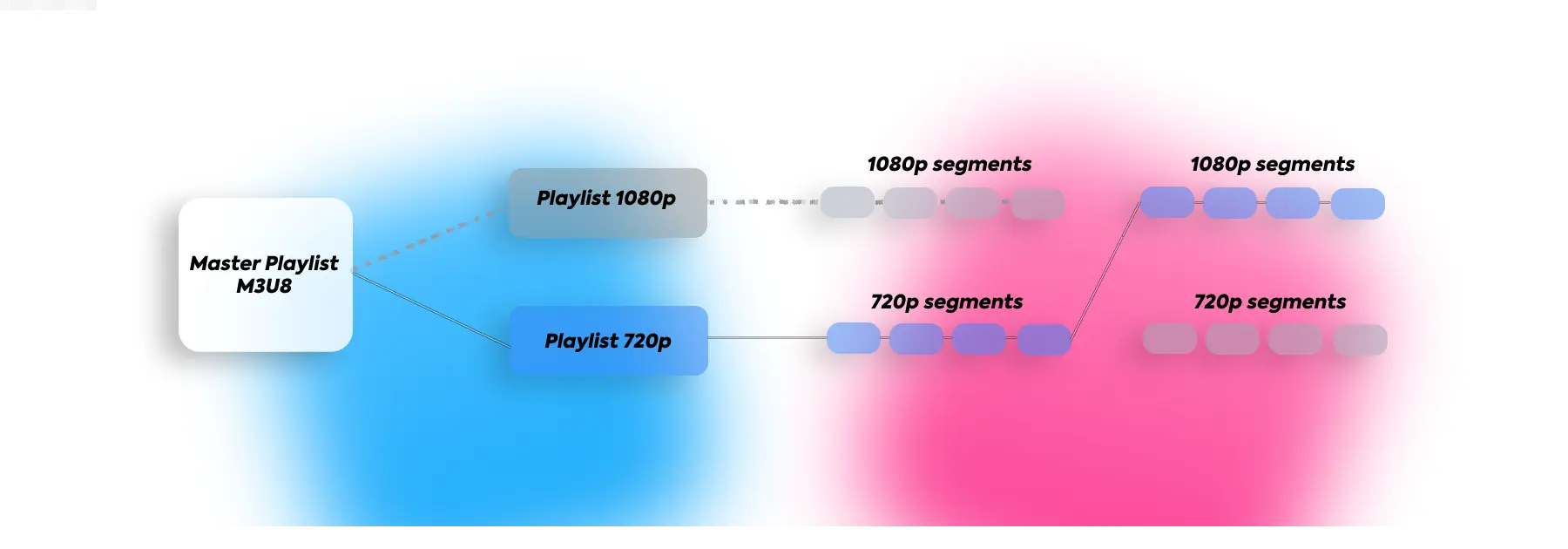

Two streaming protocols are widely used today. These are HLS, introduced by Apple in 2009, and the more recent MPEG-DASH, the first adaptive bitrate video streaming solution to achieve international standard status.

The coexistence of the two protocols and the growing need to play online video in browsers pushed the industry to unify content protection.

As a result, in September 2017 the World Wide Web Consortium (W3C) approved Encrypted Media Extensions (EME), a specification for interaction between browsers and content decryption modules (CDMs), based on five years of development by Netflix, Google, Apple, and Microsoft. EME gives the player a standardized set of APIs for interacting with the CDM.
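With EME, a player identifies each DRM by a key system string and probes which ones the browser supports (in the browser this is done with `navigator.requestMediaKeySystemAccess()`). The sketch below, assuming a hypothetical `choose_drm` helper and a preference order of your choosing, mirrors that selection logic in Python for the three DRMs listed above.

```python
from typing import AbstractSet, Optional, Sequence

# EME key system identifiers for the three DRMs discussed above.
KEY_SYSTEMS = {
    "Widevine": "com.widevine.alpha",
    "PlayReady": "com.microsoft.playready",
    "FairPlay": "com.apple.fps",
}


def choose_drm(supported: AbstractSet[str],
               preference: Sequence[str] = ("Widevine", "PlayReady", "FairPlay")) -> Optional[str]:
    """Return the first preferred DRM whose key system the browser reported as available."""
    for drm in preference:
        if KEY_SYSTEMS[drm] in supported:
            return drm
    return None  # no common DRM: fall back or refuse playback


# E.g. a browser that reported only FairPlay support.
print(choose_drm({"com.apple.fps"}))  # FairPlay
```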

A complete DRM system and three pillars of content encryption

Combining DRM with authorization mechanisms is the most reliable way to protect video content against unauthorized access. To avoid compatibility issues and minimize security gaps, follow three recommendations.

Encrypt video content with multiple keys

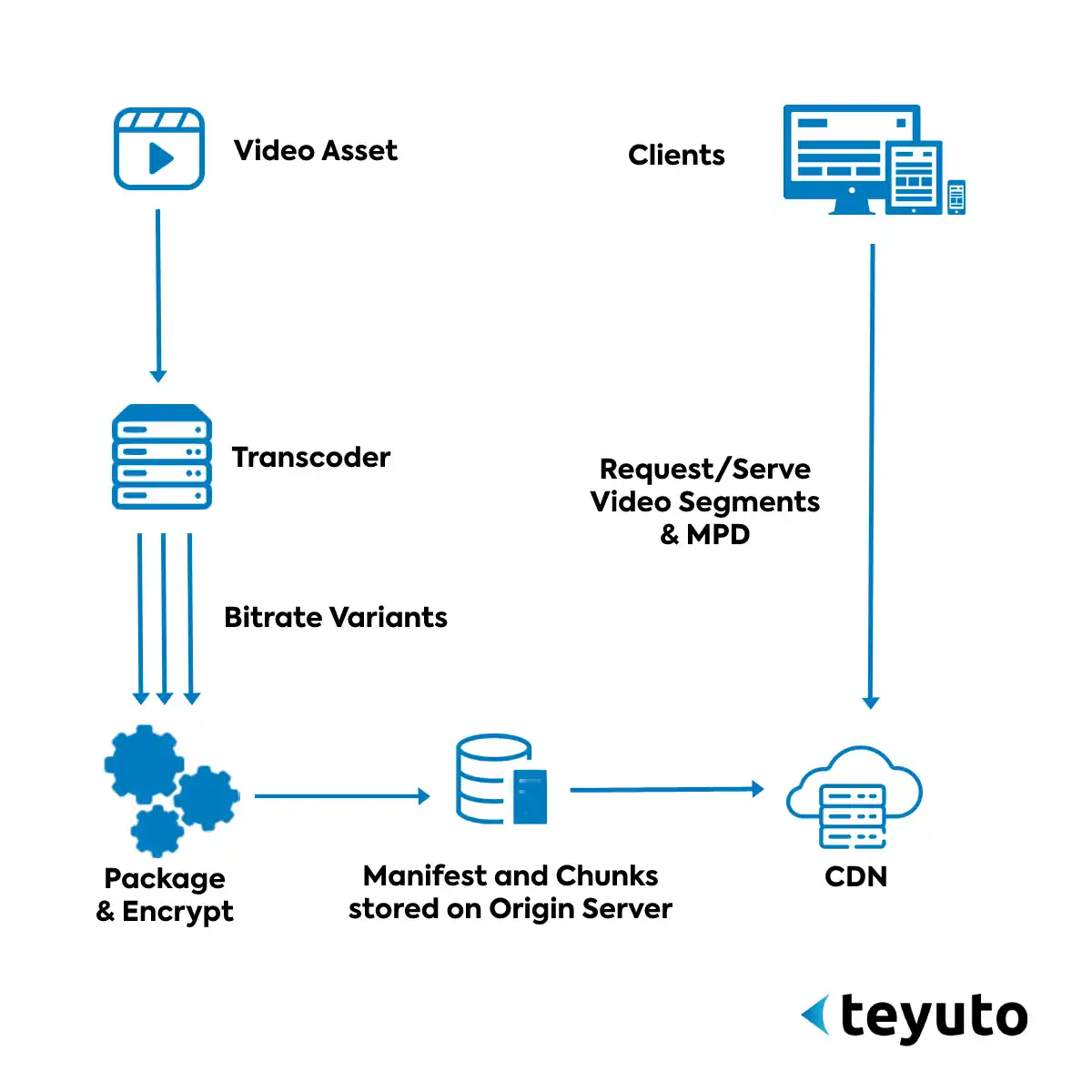

The original video file is divided into several small parts, each encrypted with a separate key. Intercepting the content from the CDN, where it is freely available, is not enough to decrypt it: the keys are also needed, and the device has to request them.

Each received key therefore decrypts only some of the video segments, not all of them. Nothing changes on the user side: the player receives decryption keys as the user watches the video.

This alone is enough to stop ordinary users from downloading the video by the most obvious means, such as VLC, FFmpeg, or browser download extensions.
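The multi-key idea can be sketched as a rotation schedule that gives each run of segments its own random 128-bit key. This is a minimal sketch, assuming a hypothetical `assign_rotating_keys` helper and a made-up `key-N` ID format; the actual encryption and key storage are left out.

```python
import secrets
from typing import Dict, List, Tuple


def assign_rotating_keys(num_segments: int, segments_per_key: int) -> Tuple[List[str], Dict[str, bytes]]:
    """Give each run of `segments_per_key` segments its own random 128-bit key."""
    keys: Dict[str, bytes] = {}
    schedule: List[str] = []  # schedule[i] is the ID of the key that encrypts segment i
    for index in range(num_segments):
        key_id = f"key-{index // segments_per_key}"  # hypothetical key ID format
        if key_id not in keys:
            keys[key_id] = secrets.token_bytes(16)  # 16 bytes = AES-128 key size
        schedule.append(key_id)
    return schedule, keys


schedule, keys = assign_rotating_keys(num_segments=10, segments_per_key=4)
print(schedule)
```

In an HLS playlist, each new key would be announced by a fresh `EXT-X-KEY` tag before the first segment it covers, so the player requests keys one at a time as playback progresses, and a single leaked key exposes only its own run of segments.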

Receive decryption keys through a license server

At a minimum, all requests to the license server must go over a secure HTTPS channel to prevent man-in-the-middle (MITM) attacks that intercept keys. Going further, it is worth protecting requests with a one-time password (OTP).
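The article does not specify an OTP scheme; one common choice is the time-based one-time password (TOTP, RFC 6238), built on HMAC-based OTP (HOTP, RFC 4226). The sketch below implements both with the standard library, assuming the client and license server share a per-user secret; how the code is attached to a license request is left as an assumption.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226)."""
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation: low 4 bits pick a 4-byte window
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, now: Optional[float] = None, step: int = 30) -> str:
    """Time-based variant (RFC 6238): the counter is the index of the current time window."""
    now = time.time() if now is None else now
    return hotp(secret, int(now // step))


def verify_totp(secret: bytes, code: str, now: Optional[float] = None, step: int = 30) -> bool:
    """Accept the current window and one window either side to tolerate clock drift."""
    now = time.time() if now is None else now
    window = int(now // step)
    return any(
        hmac.compare_digest(code, hotp(secret, window + drift))
        for drift in (-1, 0, 1)
        if window + drift >= 0
    )
```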

Issuing keys to anyone who simply requests a content ID or key ID is not secure enough. To restrict access, the user must be authorized on the site, that is, identified, for example by session ID. A token is then transmitted along with the content ID or key ID. This can be a session ID or any other identifier that uniquely identifies the user.

The license server checks whether this token grants access to the content and releases the decryption keys only if the answer is positive. Usually, the license server queries your API and caches the response in its session to reduce the load on the service.
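The flow above (token in, entitlement check against your API, cached answer, key out only on success) can be sketched as follows. This is a minimal sketch with a hypothetical `LicenseServer` class and a `check_access(token, key_id)` callback standing in for your API. It returns the raw key only for illustration; real DRM license servers wrap the key in a license that only the CDM can decrypt.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class LicenseServer:
    """Hypothetical license server: releases a key only if the entitlement API says yes."""

    def __init__(self, keys: Dict[str, bytes],
                 check_access: Callable[[str, str], bool],
                 cache_ttl: float = 60.0) -> None:
        self._keys = keys                  # key_id -> content key
        self._check_access = check_access  # your API: (token, key_id) -> allowed?
        self._cache_ttl = cache_ttl
        self._cache: Dict[Tuple[str, str], Tuple[bool, float]] = {}

    def _allowed(self, token: str, key_id: str, now: float) -> bool:
        cached = self._cache.get((token, key_id))
        if cached is not None and now - cached[1] < self._cache_ttl:
            return cached[0]  # reuse the recent answer instead of calling the API again
        allowed = self._check_access(token, key_id)
        self._cache[(token, key_id)] = (allowed, now)
        return allowed

    def request_key(self, token: str, key_id: str, now: Optional[float] = None) -> Optional[bytes]:
        now = time.monotonic() if now is None else now
        if key_id not in self._keys or not self._allowed(token, key_id, now):
            return None  # deny: unknown key or no entitlement
        return self._keys[key_id]
```

The cache TTL is the trade-off here: a longer TTL means fewer API calls, but a revoked user keeps getting keys until their cached answer expires.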

Limit the lifetime of keys

Use non-persistent licenses, which are valid only within the current session. The user's device requests a license before each playback or periodically as the video plays.

With persistent licenses, the user keeps access to the content even after that access has been revoked, so they should be reserved for videos that are meant to be available offline.
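The difference between the two license types can be modeled in a few lines. This is a simplified sketch with a hypothetical `License` record and `issue_license` function, assuming a 5-minute default lifetime: revocation is enforced when a license is requested, so a non-persistent license stops working at the next request, while a persistent one already stored on the device keeps working until it expires.

```python
from dataclasses import dataclass
from typing import AbstractSet, Optional


@dataclass(frozen=True)
class License:
    key_id: str
    session_id: str    # playback session the license was issued for
    expires_at: float  # absolute expiry time, in seconds
    persistent: bool = False

    def usable(self, session_id: str, now: float) -> bool:
        """Can the player still use this license for decryption?"""
        if now >= self.expires_at:
            return False
        # A non-persistent license works only in the session it was issued for;
        # a persistent one is stored on the device and survives restarts.
        return self.persistent or session_id == self.session_id


def issue_license(key_id: str, session_id: str, now: float, revoked: AbstractSet[str],
                  lifetime: float = 300.0, persistent: bool = False) -> Optional[License]:
    """Hypothetical issuing rule: revocation takes effect at the next license request."""
    if key_id in revoked:
        return None
    return License(key_id, session_id, now + lifetime, persistent)
```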

Other ways to protect content

Protecting video content is not limited to encryption and a DRM system; other methods can be added on top of them.

Dynamic watermarks can be overlaid in the player or during transcoding. A watermark can be a company logo or a user ID that identifies the viewer. The technology cannot prevent content from leaking, but it acts as a psychological deterrent.
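One way to make a player-side watermark "dynamic" is to move the viewer's ID to a new spot at regular intervals, so it is harder to crop or blur out. The sketch below, assuming a hypothetical `watermark_position` helper, a fixed label size, and a 10-second interval, derives a reproducible position from the user ID and the current time window; drawing the label is left to the player.

```python
import hashlib
from typing import Tuple


def watermark_position(user_id: str, video_time: float, frame_w: int, frame_h: int,
                       label_w: int = 200, label_h: int = 40,
                       interval: float = 10.0) -> Tuple[int, int]:
    """Top-left corner for the viewer's ID label; the spot changes every `interval` seconds."""
    window = int(video_time // interval)
    digest = hashlib.sha256(f"{user_id}:{window}".encode()).digest()
    # Reproducible pseudo-random coordinates that keep the whole label inside the frame.
    x = int.from_bytes(digest[:4], "big") % (frame_w - label_w + 1)
    y = int.from_bytes(digest[4:8], "big") % (frame_h - label_h + 1)
    return x, y
```

Because the position is derived from the user ID, the same viewer sees the same sequence of positions on every replay, which can help when matching a leaked recording to its source.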

DNA coding: the video is encoded in 4-5 different variants, and each user receives their own sequence of variants. The process can be divided into two parts. First, video chains are generated by embedding symbols into each frame of the original uncompressed content.

The frames are then encoded and stored on the server. Second, when a user requests protected content, the provider associates a digital fingerprint with that client. The fingerprint can be created in real time or taken from a database, and consists of a string of symbols that refer to the video chains.

These symbols are used to create a watermarked video by switching between groups of pictures taken from the different video chains.
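The per-user sequence can be sketched as choosing, for every segment, which of the pre-encoded variants (video chains) to serve, and later matching a leaked copy against known users. This is a minimal sketch, assuming a hypothetical `SECRET`, one variant choice per segment (group of pictures), and 4 variants; real forensic watermarking also has to survive re-encoding and collusion between users, which this ignores.

```python
import hashlib
import hmac
from typing import List, Optional

SECRET = b"hypothetical-fingerprint-secret"


def fingerprint(user_id: str, num_segments: int, num_variants: int = 4) -> List[int]:
    """Per-user sequence: which pre-encoded variant to serve for each segment."""
    sequence = []
    for segment in range(num_segments):
        mac = hmac.new(SECRET, f"{user_id}:{segment}".encode(), hashlib.sha256).digest()
        sequence.append(mac[0] % num_variants)
    return sequence


def identify_leaker(leaked: List[int], candidates: List[str],
                    num_variants: int = 4) -> Optional[str]:
    """Return the candidate whose fingerprint matches the most segments of a leaked copy."""
    best, best_score = None, -1
    for user in candidates:
        expected = fingerprint(user, len(leaked), num_variants)
        score = sum(a == b for a, b in zip(leaked, expected))
        if score > best_score:
            best, best_score = user, score
    return best
```

With 4 variants, an unrelated user matches about a quarter of the segments by chance, so even a few dozen recovered segments separate the real source clearly from everyone else.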

In a Nutshell

Safeguarding streaming video helps prevent piracy, theft, and unauthorized access. Ways to protect streaming video include:

DRM: Protect your video content with DRM. DRM blocks many forms of unauthorized copying and, on platforms that support it, screen recording.

Watermarking: Put a visible or invisible watermark on your video footage to identify the source and track illicit distribution.

Geoblocking: Restrict your videos to specified countries or regions, blocking viewers outside them.

Safe Video Hosting: Choose a platform with encrypted data transfer, multi-factor authentication, and frequent security updates.

Restricted Access: Require login credentials, access codes, or other authentication to restrict streaming video material to approved users.

Legal Protections: Register copyrights and trademarks, and be prepared to take legal action against piracy and infringement to protect your streaming video material.

Summarizing

Security technologies are becoming more advanced while staying out of the user's way and simplifying developers' lives. Teyuto provides its users with signed URLs as a security measure, which help ensure that only authorized users can access their videos and protect their content against unauthorized sharing and distribution.

Teyuto offers a comprehensive video streaming solution for businesses and individuals looking to broadcast their content to a larger audience. It provides encryption, digital rights management (DRM), and streaming privacy to protect VOD content.